Metrics Snapshot

Some figures are approximate and based on memory. I’m intentionally keeping this honest and measurable.

Problem

Small business owners often don’t have the time, tools, or people to consistently monitor their online presence (Facebook, Yelp, Google, etc.). Reviews can go unnoticed for long periods, and even when owners read them, it’s not always clear what to do next.

Who felt it most

The buyer and the end user were typically the same person: the business owner. In practice, the pain wasn’t strong or urgent enough to create buying behavior.

Insight

The idea started as a hackathon project focused on gyms and fitness centers. A local gym had a large volume of negative reviews, with little visible response and no apparent cycle of improvement.

Retrospective: the “window wasn’t broken enough.” We had to sell the customer on the problem first, which made selling the solution exponentially harder.

The Bet

Hypothesis: If we build a platform that consolidates review insights and provides actionable steps in one place, small business owners will pay a subscription because doing this manually is slow and inconsistent.

Biggest early assumptions:

- The problem was already top-of-mind and urgent for owners (it wasn’t).

- Believing in the problem/solution ourselves meant others would pay (it didn’t).

- We could build first, then validate demand through outbound (validation came too late).

Constraints

- Time: student schedules + summer travel split focus and sprint rhythm.

- Budget: self-funded; cautious about spending heavily without revenue.

- Bandwidth: inconsistent pace/urgency across the team (including me).

- Experience: first time building something intended to scale to many businesses.

- Riskiest unknown: product-market fit (would anyone actually want this?)

Solution

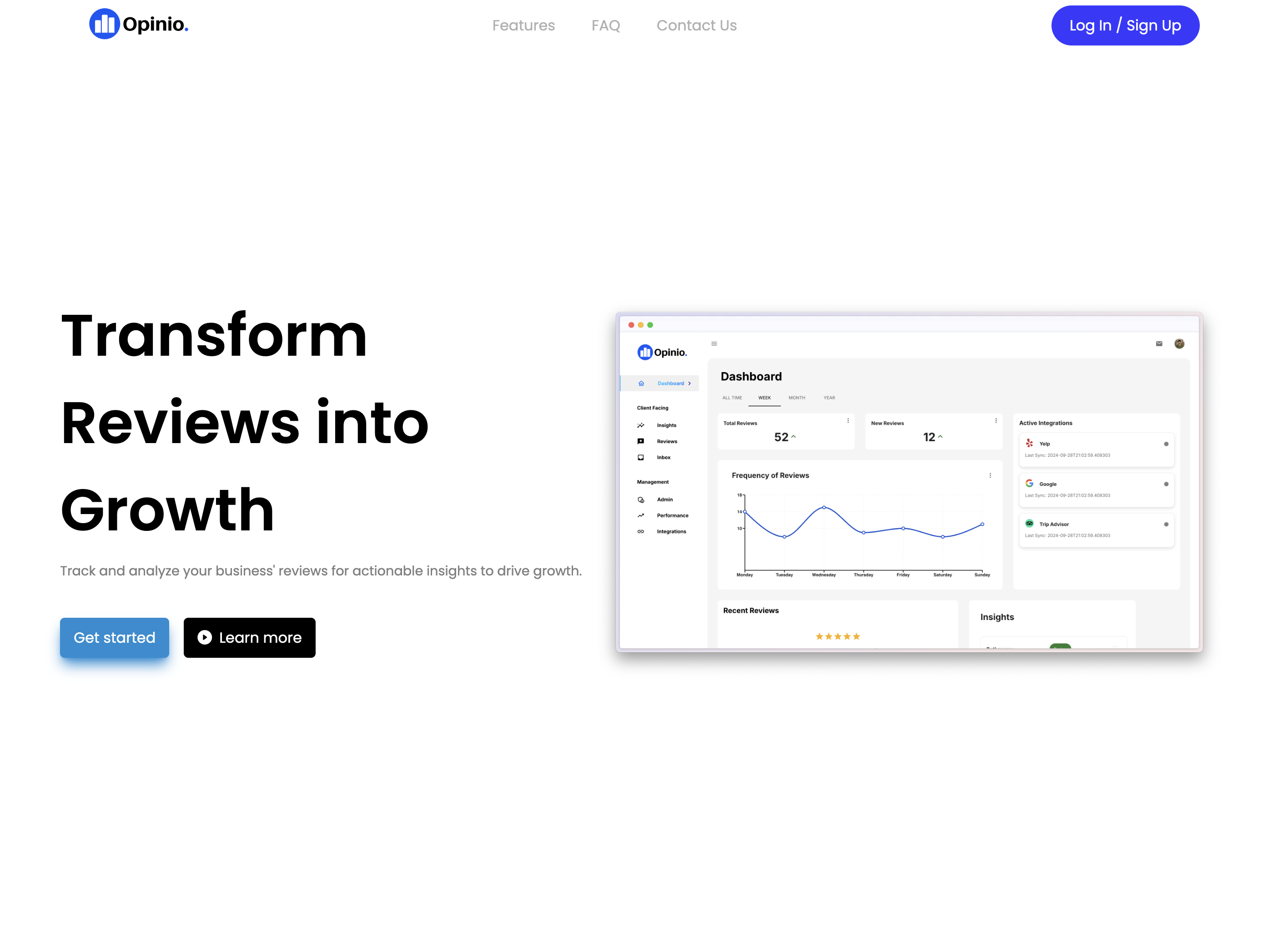

We shipped a full dashboard experience rather than a narrow MVP. The product was designed to minimize time spent in the tool: land on the dashboard → see what’s going well → see what’s going wrong → view suggested improvements.

Key features

- Review analysis: summarize themes across reviews.

- Actionable insights: suggested improvements based on review patterns.

- AI review response generation: planned/partially explored, but did not fully ship due to bandwidth.

Scope decisions

- Shipped: the entire dashboard at once (breadth over validation speed).

- Didn’t build: automated review reply tool and competitor comparisons (bandwidth).

- Regret: a proprietary “score” for judging performance. We removed it later because the reasoning behind it was weak.

Tech (high-level)

This is intentionally summarized. The story is product + GTM + learning, not tooling.

- Frontend: React 18, MUI/NextUI, Redux Toolkit, Recharts, Tailwind CSS, Framer Motion

- Backend: Python/Flask

- Data: DynamoDB

- Async: Redis Queue

- AI/ML: Azure OpenAI, NLTK, scikit-learn, HDBSCAN

- Infra: Docker

- Auth: Clerk

Go-to-Market

Channels tested

- Outbound cold calls

- Cold emails (lead list purchased via Fiverr)

- Tools such as Yesware, plus paid Yelp testing

Pricing iteration

Early pricing was modeled after US competitors: $499 USD/month. After a call in which the price was called “really high,” we removed pricing from the site and switched to quoting prices on calls.

Retrospective: we were iterating pricing before we had proof the problem was urgent enough to pay for.

Distribution lesson

If you have to work hard to sell the user on the problem first, it becomes exponentially harder to sell them on the solution.

What went well

- Built and shipped a full product with a team (first time doing “startup-style” execution end-to-end).

- Ran real outbound attempts and learned sales reality firsthand.

- Applied to Y Combinator and learned what strong startup narratives require.

What didn’t

- Insufficient user discovery early → building happened before demand was proven.

- Quality-over-speed mindset delayed validation (we learned too late that demand was weak).

- No consistent sprint structure → misalignment, urgency mismatch, and slower execution.

Diagnosis (why it failed)

The core issue was problem selection and validation: we didn’t anchor on a specific user with a strong, existing pain. The pain existed in theory, but it wasn’t urgent or top-of-mind enough to drive buying behavior.

Kill signal: after ~2 months of outbound sales efforts, we had converted zero paying customers.

If I restarted today

- Start with 15–25 user interviews before building, and commit to a narrow ICP.

- Ship a tiny MVP in days (not a full dashboard) to test willingness-to-pay early.

- Validate a distribution channel before scaling product scope (prove “reach” first).

Costs (approx)

- Domain (opinio.cc): $8.49 (first year)

- Google Workspace: $25/month

- Fiverr lead list: $80

- Yesware: $35/month

- Yelp testing: $50/month (tested for 2 months)

- Servers: ~$32.82 CAD early on; later ~$56 (last charge) before switching to AWS credits

Learnings (so far)

- User research isn’t optional: if demand isn’t proven, building is guessing.

- Speed beats polish early: quality-first delayed the truth.

- ICP clarity matters: “any business with reviews” was too broad to sell.

- Team process is product leverage: unclear cadence created drag.

- Distribution is the constraint: outbound is brutal if the pain isn’t already urgent.

Assets